Database Migrations for a Project without Entity Framework Core

Without getting into the specifics, here's an outline of a problem we were trying to solve: DevOps needs to be able to perform migrations from our .NET projects. As is common practice, services use a staging, UAT and production database, and that means every change to the database schema must be replicated across all three. Usually that's not a problem, as we can just use Entity Framework migrations, but in the case of one particular service, a custom data layer was being used instead of Entity Framework Core.

So, what to do? We came up with four possible short-term fixes:

Add a migrations project to the repository.

Add Entity Framework, DbContext and models on top of the existing code.

Use DbUp.

Add a SQL Server Database Project to the repository.

Adding an Entity Framework project

The first solution I'd already added implemented, and it's usable by a DevOps team with minimal effort - it's just a matter of installing Entity Framework Core CLI and running a few commands (literally two or three) in PowerShell to get whatever files are needed, whether that's a migration, multiple .sql files, a bundle, etc.

The advantage of this is it's not dependent on anything other than Entity Framework components, a DbContext and a model, since all it needs is a schema. And it wouldn't affect the service during runtime. The only disadvantage I could think of would that it's not obvious to other developers that the model needs to be updated to reflect any changes to the main project.

Modifying the data layer

The second option - again my idea, but not the most elegant - would be to add Entity Framework and another DbContext on top of the existing data layer. Advantage: DevOps should be able to generate migrations and scripts from the main project, as with the other services, and I'll be refactoring the code anyway. Disadvantage: The codebase might become a lot more complicated and messy, and it comes with the risk of breaking something.

DbUp

The third option, suggested by another engineer, was to use something called DbUp.

DbUp might be a solution for a project that doesn't use Entity Framework.

The code I've seen would execute, and attempt to update the database, whenever the service starts. This would probably happen just once after a new version is deployed, but a service shouldn't have permissions to modify the schema on the target database.

Changes need to be defined in SQL scripts instead of a model. Would this really be a migration?

DbUp is third-party software, but is being maintained and has over 80 contributors.

Deprecation and breaking changes are common problems when using third-party software, and, as I expected, dbup had indeed been deprecated and we'd need to use dbup-sqlserver instead.

Migrations Using Visual Studio SQL Server Database Project

We decided on a fourth solution, which is to add a SQL Server Database Project to the solution. I'm not sure how that's better than my idea for creating the EF Core project and running a few commands that would generate everything needed, but apparently it is from the DevOps perspective.

Add a new project to the Visual Studio solution. This project will initially be empty, without assembly references or scaffold files - it appears everything being used is native to Visual Studio.

To this we need to add the connection to the database the schema is to be imported from. There will be a menu option for 'Import' -> 'Database'. After the connection is made, the project will be populated by a SQL script for each table, and, by default, a few other scripts to apply or update security policies for the database. It is preferable to remove (don't delete) the Security directory from the project.

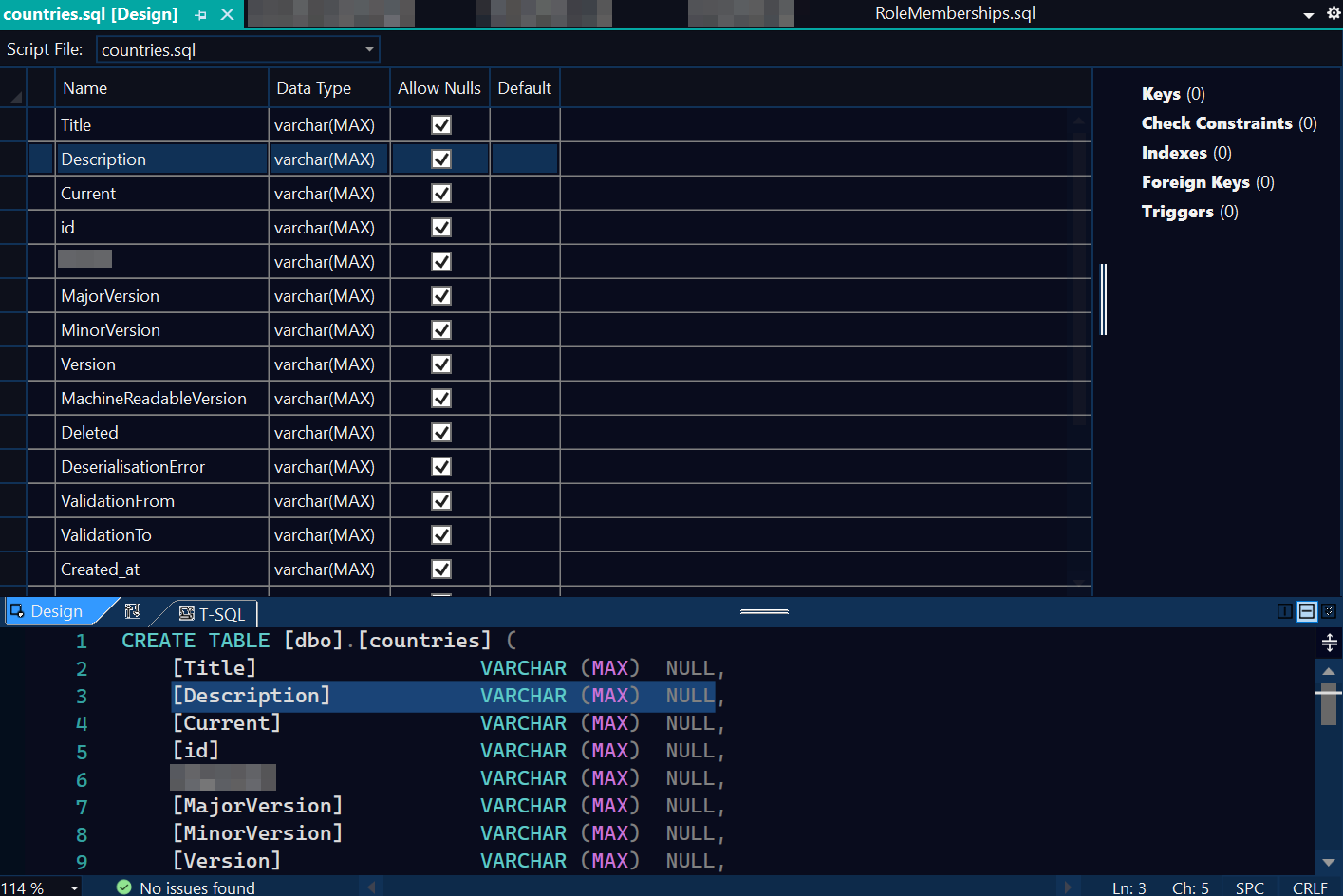

If we double-click on a given script, the main window will display the script and designer views. Either one could be used for modifying the schema and/or fields. Another view of a given database table can be displayed in the SQL Server Object Explorer window, if we select 'View in Object Explorer'.

After changes are made, Visual Studio can display a comparison between the local schema and that on the target database, using 'Schema Compare...'. This might take a few moments, if it's running through a large number of tables and .sql files.

Before running any of these, it's probably a good idea to make a backup of the existing database.

There are four ways I can see of using this project to update the database:

The script(s) can be run manually on the database server.

We can build/rebuild the project, which will generate a DACPAC file in /bin/Debug - this doesn't appear to work in SQL Server Management Studio v18, but I'm guessing the aim here is to use the file in some automated Azure process.

The project can be configured to also generate a SQL script during a build, but that contains everything under the dbo directory.

The Publish feature will attempt to run the scripts on a target database server to push the schema update.

While the scripts listed in Solution Explorer are CREATE TABLE scripts, the ones generated in the Schema Compare run the ALTER TABLE command.

I have done some cursory testing, by attempting various updates to a couple of the tables on the target database. For one of the tables I changed the data type of the id field to int, and this failed because the new data type was incompatible with the existing data, and a forced update would have most likely caused data loss. There will be an exception for any operation that's likely to result in a loss of data. It is possible to override these checks in the Schema Compare Options, if the current settings are blocking the desired changes.

Next, I added a column called 'ExtraTest' to both tables, and used the Update option in the Schema Compare window to push the update. The columns were added successfully without any loss of data.